Why LLMs might actually lead to AGI

Type “LLMs will never become AGI” into Google, and you will find dozens of essays, some from top experts in the field.

Ilya Sutskever is encouraging young researchers to pursue new ideas, while Francois Chollet is struggling to come up with a benchmark that can prove when AGI is here. Yann LeCun flat out declares that scaling LLMs is complete BS.

Meanwhile, Sam Altman promises AGI in 2025, and Dario Amodei dreams about Machines of Loving Grace. At the same time, Geoffrey Hinton and Yoshua Bengio warn about extreme risks.

The world’s brightest minds don’t exactly agree on the topic. Partly it’s because they all have different incentives. Another part is that even though many intuitively get what AGI means, we still debate endlessly about its definition.

I don’t think it’s useful to define AGI here. It’s like “what is love” in a way. Ask 10 people and you will get 14 different answers, but you still know if you love your mom. Most agree that cats are intelligent and LLMs are not. If you really need a definition, here is Wikipedia’s.

About feasibility, I assume AGI is achievable. There’s no good reason to think it’s impossible, and things that are possible and highly incentivized tend to eventually happen. If the Wright brothers hadn’t invented airplanes, someone else would have. So, to me, the main confusing question was how we get to AGI.

With that in mind, I think I finally understand how LLMs could actually lead us there.

AI progress is bounded by research speed and energy

Before getting into the path from LLMs to AGI, I will make a simple claim that I couldn’t prove wrong so far:

AI progress is bounded by research iteration speed and the total amount of energy available for computation.

Research speed means how quickly we can test ideas and make them work in practice. Think of it as the time between researchers conceptualizing “thinking models” and o1 showing up in ChatGPT.

Better algorithms, evals, and model architectures are all research outputs that give us more “intelligence”. New chips, scaling methods, and infrastructure optimizations (also research), give us more FLOPs per watt.

Energy for computation means how many watts we spend for AI. Building more datacenters increases our total available wattage.

Since AI is math operations at scale, more FLOPs means faster iteration. Better FLOPs per watt come from research, and more wattage comes from datacenter capacity. The combination of the two determines how many operations we can do, or, in other words, how many things we can try.

Everything is reduced to two variables: research speed and energy.
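To make the two-variable claim concrete, here is a minimal Python sketch of the arithmetic: the operations budget is efficiency times power times time. The function names and every number in it are hypothetical placeholders chosen for illustration, not measurements of any real chip or datacenter.

```python
# Illustrative only: all figures below are made-up placeholders.

def operations_budget(flops_per_watt: float, watts: float, seconds: float) -> float:
    """Total operations available = efficiency x power x time.

    flops_per_watt: sustained FLOP/s delivered per watt (research raises this).
    watts: total power dedicated to AI compute (datacenters raise this).
    seconds: how long the hardware runs.
    """
    return flops_per_watt * watts * seconds


def experiments_possible(budget_ops: float, ops_per_experiment: float) -> int:
    """How many experiments of a given cost fit inside the budget."""
    return int(budget_ops // ops_per_experiment)


DAY = 24 * 60 * 60  # seconds in a day

# Hypothetical baseline: 1e11 FLOP/s per watt on a 1 MW cluster for one day.
baseline = operations_budget(flops_per_watt=1e11, watts=1e6, seconds=DAY)
# Doubling efficiency (research) or doubling power (energy) has the same effect.
better_chips = operations_budget(flops_per_watt=2e11, watts=1e6, seconds=DAY)
more_power = operations_budget(flops_per_watt=1e11, watts=2e6, seconds=DAY)

for name, budget in [("baseline", baseline),
                     ("better chips", better_chips),
                     ("more power", more_power)]:
    print(name, experiments_possible(budget, ops_per_experiment=1e20))
```

In this toy model, doubling either variable doubles the number of experiments you can afford, which is the sense in which progress is bounded by both.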

Note: In this post, I will focus on research speed.

Quick detour: The data problem is not real

Skeptics claim that AI progress will slow down because we fed LLMs the entire internet and are running out of data. This is false. Other people made a better case, but here’s my own summary:

- We haven’t trained on the entire internet yet.

- Today’s AIs train on a tiny fraction of all available data.

- There are massive amounts of non-internet data that haven’t been combined to train a single model yet.

- State of the art “world models” still don’t use information from the physical world, where all digital data came from. When AI can collect and train on real-world data directly, we’ll see a steep leap forward.

- Humans and animals are trained with much less data and energy than LLMs. We are living proof that it’s possible to get better intelligence without more data. We just don’t know how, yet.

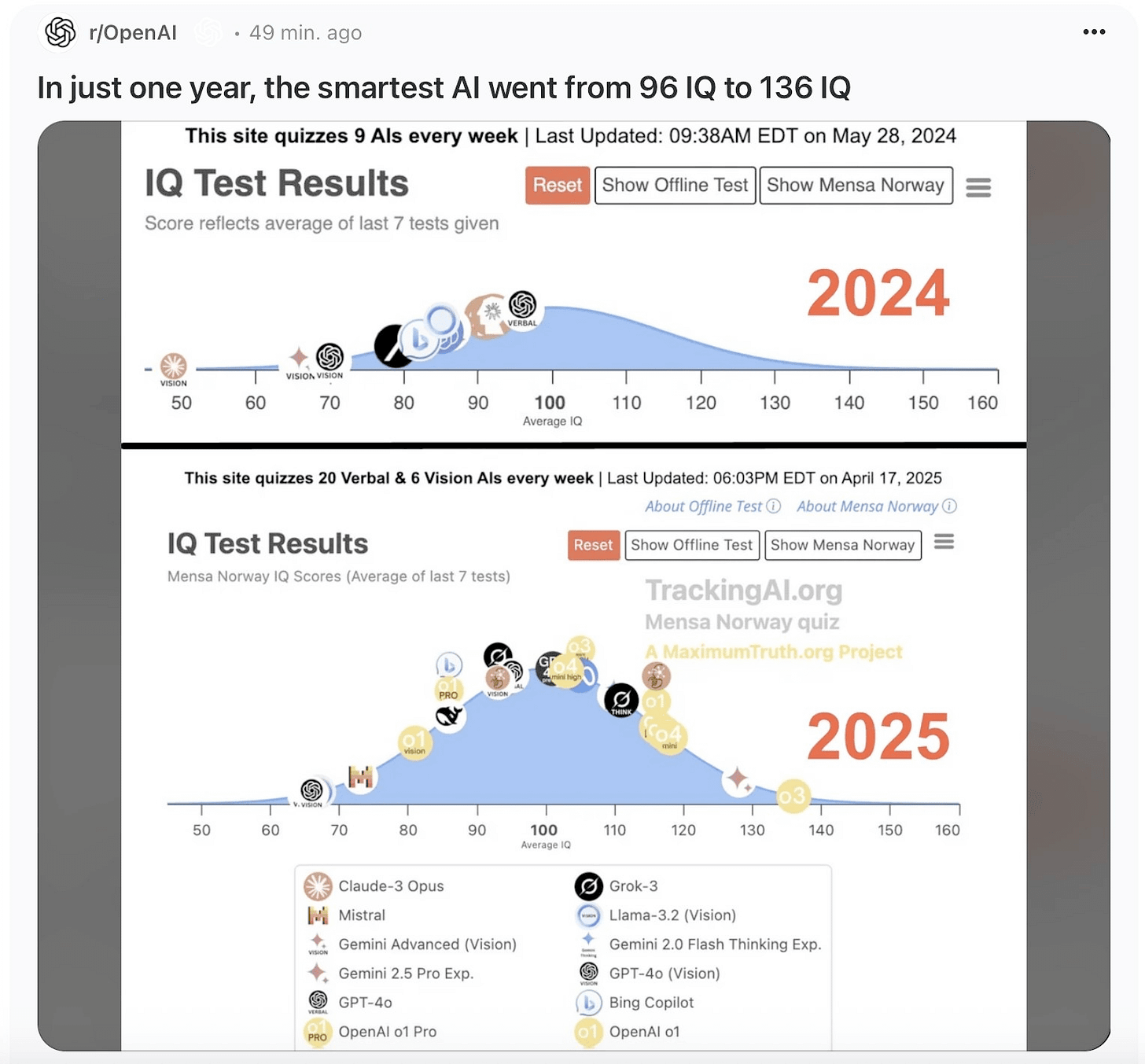

What changed within a year

LLMs today can easily make beautiful landing pages and demo apps, contribute to existing codebases, and perform basic tasks in containerized computer environments like ordering pizza or booking tickets. As almost everyone experienced a month ago, AI can now turn any picture into a Ghibli scene and follow visual instructions surprisingly well.

These tasks sound boring, but that’s because the more hype AI gets, the higher our expectations go. A year ago, none of this worked [1]. We didn’t even have multimodal or thinking models yet, both of which were instrumental in making agents possible [2].

In less than a year, we went from AI barely completing toy tasks to autonomous delivery of economically valuable work.

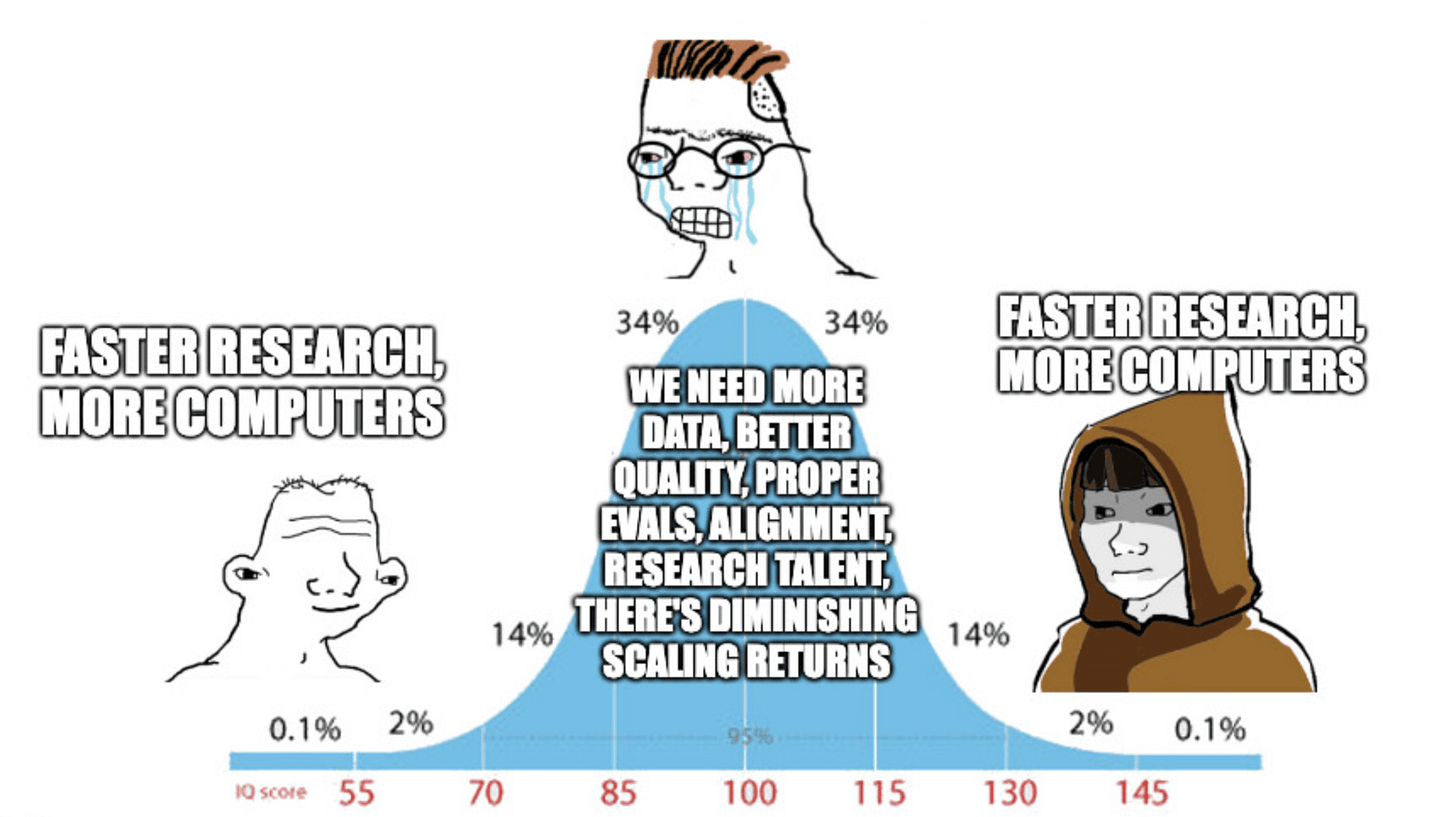

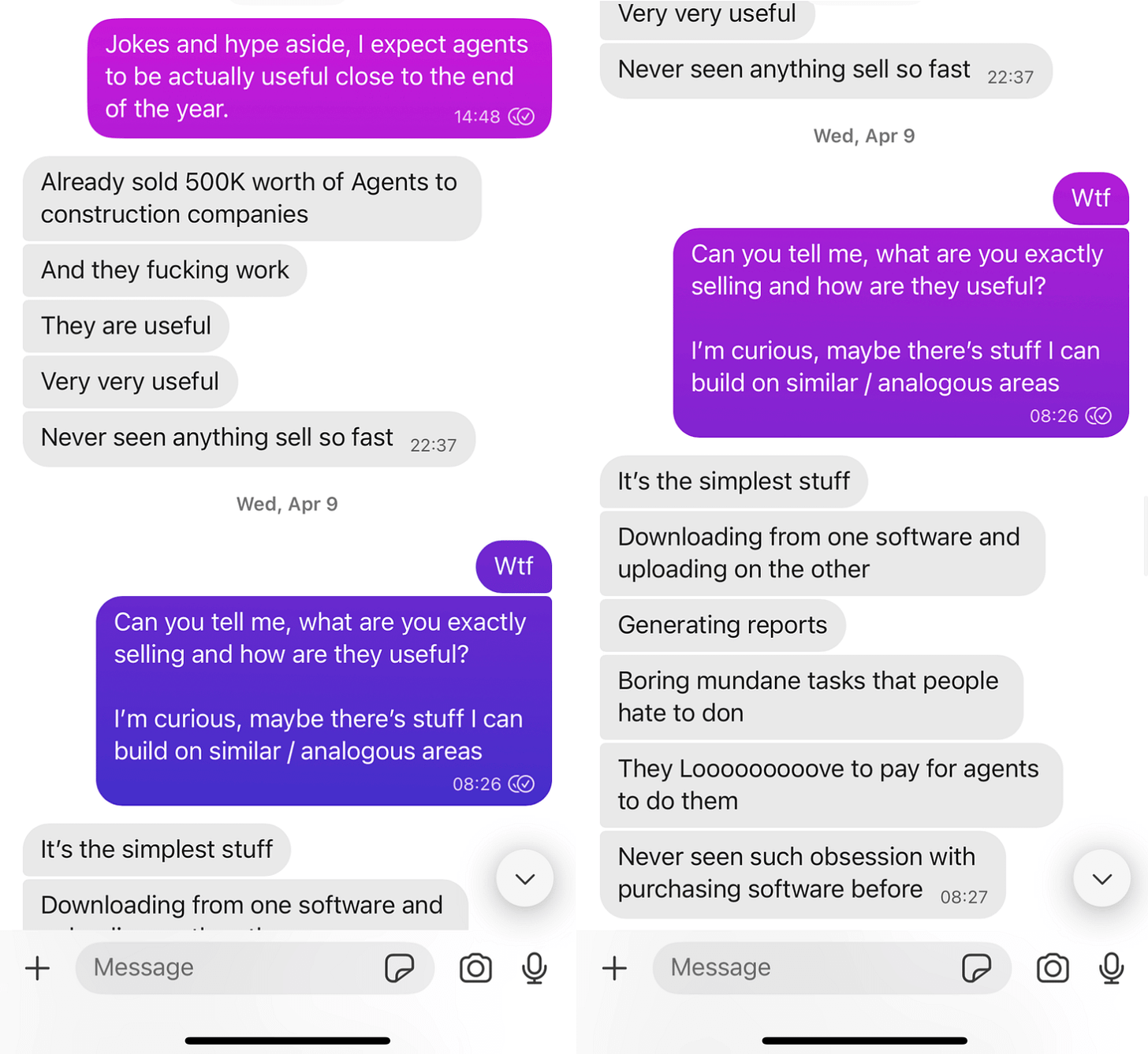

The two images below succinctly summarize the progress:

I’m often skeptical when the internet screams about the latest AI announcement being a game changer, but here is my friend’s startup making €500K in sales of agents to construction companies, of all people (civil engineering is a very conservative field).

But what does this have to do with AGI? Yes, models are improving fast, but a cat is still, in some ways, more intelligent than an LLM.

I suspect that no person on earth today has any concrete idea how to get to AGI. But we still have a simple path to get there.

AI research is boring work

Lore around AI research has grown to mythical proportions. I’m told that there are those who believe Ilya Sutskever and Noam Shazeer enter transcendent states of consciousness, finding the next great idea during out-of-body experiences in direct communication with the Universe.

Yes, brilliant moments do exist. When you are at the whiteboard with colleagues, rapidly exchanging ideas in harmonious synchrony. Or when you are locked in with your noise-cancelling headphones, inventing a new model architecture.

But there’s one bit I grossly overlooked when I doubted why labs invest so much in LLMs:

AI research is often embarrassingly boring work.

Ask any researcher. On good weeks, they get a few hours of high-leverage, creative work.

Part of the “problem” is being human: we can’t stay in creative flow all the time. But most of the problem is bullshit tasks: recovering from failed training runs, fixing broken pipelines, debugging sharding issues. These aren’t creative problems, and they definitely don’t require exquisite research insight, taste, or brilliance. And humans are bad at these tasks, because you often need to look in lots of different places, and there’s too much information to parse.

The point is:

We are not out of ideas. People have tons of ideas and too little time to try them out. The boring, repetitive work is the bottleneck to progress.

In fact, AI labs are currently locked in a race, “forced” to double down on ideas already proven to work. It’s a tough dance to balance: if they don’t get short-term results, they will fail to raise more money and go bankrupt. This makes exploratory research a lower priority. And that’s exactly the kind of work that leads to breakthroughs.

LLMs are a shortcut to AGI

At this point, you should be able to put the pieces together. If AI progress is bounded by research speed, and most of research is boring work… we should be able to automate it.

Humans are good at having ideas, but exploring multiple similar approaches, parsing lots of information, and debugging are slow and tedious tasks. Machines are excellent at exploring variations, parsing logs, and crunching data, but they don’t know how to interpret the results. The two complement each other.

Which leads us to the main point of this whole thoughtstream:

The masterplan was never to magically turn LLMs into AGI. It’s to use LLMs as research accelerators. LLMs will do the gruntwork that humans are bad and slow at, freeing us to focus on what we’re good at: exploring ideas.

If AGI is a diamond hidden in a massive maze, we now have a few highly trained humans crawling through with flashlights. LLM agents would let each researcher supervise a thousand explorations in parallel.

And we are almost there. The only remaining piece is having agents that can handle the boring parts of research.

Early tools like Manus AI and Replit already do useful work. Google is training AI co-scientists and I would be surprised if the others are not exploring similar ideas. My current guess is that the big AI labs will be deploying agents that can do basic research tasks before the end of the year. After that, it will probably take another year for these agents to automate significant parts of the process.

Once LLM agents can run experiments, summarize logs, and debug pipelines, many bottlenecks are gone. We can try more ideas. Good ones get refined faster. That leads to better, more autonomous models, and the whole system accelerates.
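One rough way to put numbers on this is an Amdahl's-law-style estimate: if some fraction of research time is grunt work and agents speed up only that fraction, the overall speedup is capped by the creative part that remains. The sketch below is my own illustration of that reasoning; the function name, the 90% boring fraction, and the speedup factors are hypothetical assumptions, not data.

```python
def research_speedup(boring_fraction: float, automation_speedup: float) -> float:
    """Amdahl's-law-style estimate of overall research speedup.

    boring_fraction: share of researcher time spent on automatable grunt work (0..1).
    automation_speedup: how many times faster agents get that grunt work done (>= 1).
    """
    if not 0.0 <= boring_fraction <= 1.0:
        raise ValueError("boring_fraction must be between 0 and 1")
    if automation_speedup < 1.0:
        raise ValueError("automation_speedup must be at least 1")
    creative_fraction = 1.0 - boring_fraction
    # Creative time is unchanged; only the boring share shrinks.
    return 1.0 / (creative_fraction + boring_fraction / automation_speedup)


# Hypothetical assumption: 90% of the week is boring work.
for factor in (2, 10, 100):
    print(f"agents {factor}x faster -> research {research_speedup(0.9, factor):.2f}x faster")
```

Under these assumptions the gain approaches but never exceeds 10x, because the creative 10% stays human-paced. The model also ignores running many explorations in parallel, so treat it as a conservative back-of-the-envelope sketch rather than a forecast.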

Maybe this leads to AGI. Probably it just leads to much better models. Either way, our chances to find the next breakthrough increase.

LLMs are the most straightforward way to meaningfully speed things up.

Notice that there is no magic ingredient here and, importantly, no hand-wavy statements like “AI will recursively improve itself leading to intelligence explosion.” All you need to believe is that LLM agents can automate boring work. This would already be enough for transformative AI improvements and economic growth.

Where this leaves us

Do I know for sure that LLMs will lead to AGI? No. But am I confident that they will massively accelerate research? 100%.

This takes care of the speed constraint for progress.

Thanks to intellectual elites prophesying increasing tensions between countries, it seems that the world’s powers (US, China) are in a full-throttle race to build datacenters and power plants. That’s the energy constraint.

As long as speed and energy are progressing, we are getting closer to AGI. Simple as that.

The whole explanation is not particularly fancy, and that’s exactly why it didn’t even occur to me for so long. It felt too simple an explanation for the seemingly uncrossable gap between LLMs and AGI. No fireworks, no magic ingredient.

Maybe this should have been obvious all along:

A simple plan you can follow beats a genius one you don’t.

Since it took me this long to understand AGI believers, I don’t feel qualified at all to reason about ASI or alignment. They are too vague. I believe that as AI gets better, what we need to do will become clearer, but many would argue this is naive.

There’s another question that bothers me now: since we are entering a period of explosive growth soon, what’s the highest leverage plan I should be executing on now?

My current favorite 2025 meme echoes in the back of my head:

“it is sometimes better to abandon one’s self to destiny”

Thanks to Masha Stroganova and John Carpenter for reading drafts of this.

Notes:

- Everything I write here is my own opinion. I am not a researcher; I’m an engineer building infrastructure.

- Essays you can read about AI predictions:

- What 2026 looks like, written in 2021 by Daniel Kokotajlo. It got many things astonishingly right, but it was thankfully too pessimistic about social predictions.

- The Scaling Hypothesis, written between 2020 and 2022 by Gwern. This was one of the core works that reinforced the idea that scaling results in new capabilities: emergent properties not found at smaller scale.

- Situational Awareness, written mid-2024 by Leopold Aschenbrenner.

- AI 2027, released a few weeks ago by Daniel Kokotajlo, Scott Alexander, and a few others. I read this midway through writing my post. Seeing a similar narrative made me a bit more confident in mine. I think (hope?) that their social predictions are too pessimistic, similar to Daniel’s past essay.

[1] You can probably point out toy cases where models could kind of build end-to-end code, or some GitHub repo that attempted “agentic” behavior. In practice, the models needed a lot of handholding, they would fail a lot, and they could only tackle much simpler problems.

[2] GPT-4o was released on May 13, 2024, and o1 started previewing on September 12.